Open Sql*Plus, copy and paste the following contents:

set head off feed off pages 0 serveroutput on

col banner format a72 wrap

select banner

from sys.v_$version;

select ' With the '||parameter||' option'

from sys.v_$option

where value = 'TRUE';

select ' The '||parameter||' option is not installed'

from sys.v_$option

where value <> 'TRUE';

begin

dbms_output.put_line('Port String: '||dbms_utility.port_string);

end;

/

set head on feed on

Thursday, August 28, 2008

Ascii Table

Image taken from http://www.cdrummond.qc.ca/cegep/informat/Professeurs/Alain/files/ascii.htm

The Script to print the Ascii table:

declare

i number;

j number;

k number;

begin

for i in 2..15 loop

for j in 1..16 loop

k:=i*16+j;

dbms_output.put((to_char(k,'000'))||':'||chr(k)||' ');

if k mod 8 = 0 then

dbms_output.put_line('');

end if;

end loop;

end loop;

end;

Wednesday, August 27, 2008

Delete stock locators by using API

Ever wondered on how to delete the stock locators by using Oracle API.

Click here for the complete code..

Click here for the complete code..

How to find the Quantity on hand.

Abstract

This article is how to find the Quantity on hand.

The quantities considered in calculating the

Available quantities are

On hand Quantity --> quantity from mtl_onhand_quantities_details

Allocated Quantity --> mtl_material_transactions_temp

Reserved Quantity --> mtl_reservations

But these table reflects the total of quantity available in the sub inventory without

considering the provision for reserve or allocated quantity.

For Example - Total on hand quantity: 100,

Allocated quantity : 10

and reserved quantity: 20.

let suppose out of 20 reserved quantity, 5 is reserved against the demand source for

which we are performing the transaction right now.

Then the available quantity while transacting the above source is 100-10-20+5 =75.

But in the above tables we will get it as 100 only as not a single unit is went of of the sub inventory.

The relevant form in application is INVMATWB.fmb.

NAVIGATION:

Inventory > On Hand Availability>On-hand quantity --- query the item.

The API, that calculates the available quantities is

inv_quantity_tree_pvt.create_tree.

Author: P Anand Rao

This article is how to find the Quantity on hand.

The quantities considered in calculating the

Available quantities are

On hand Quantity --> quantity from mtl_onhand_quantities_details

Allocated Quantity --> mtl_material_transactions_temp

Reserved Quantity --> mtl_reservations

But these table reflects the total of quantity available in the sub inventory without

considering the provision for reserve or allocated quantity.

For Example - Total on hand quantity: 100,

Allocated quantity : 10

and reserved quantity: 20.

let suppose out of 20 reserved quantity, 5 is reserved against the demand source for

which we are performing the transaction right now.

Then the available quantity while transacting the above source is 100-10-20+5 =75.

But in the above tables we will get it as 100 only as not a single unit is went of of the sub inventory.

The relevant form in application is INVMATWB.fmb.

NAVIGATION:

Inventory > On Hand Availability>On-hand quantity --- query the item.

The API, that calculates the available quantities is

inv_quantity_tree_pvt.create_tree.

Author: P Anand Rao

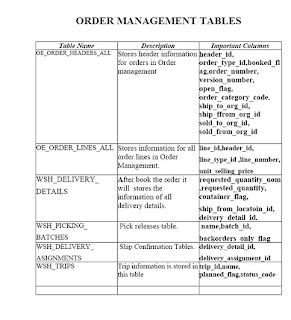

Imp Sales Order Queries

Query to retrive the header information of the Sales Order Form is:

SELECT ooha.header_id, ooha.order_number, ott.NAME "ORDER TYPE",

hp.party_name "CUSTOMER", hca.account_number "CUSTOMER NUMBER",

ooha.ordered_date "DATE ORDERED", qh.NAME "PRICE LIST",

ooha.transactional_curr_code "CURRENCY",

ooha.cust_po_number "CUSTOMER PO",

ooha.freight_carrier_code "SHIPPING METHOD",

ooha.flow_status_code "STATUS", rtt.NAME "PAYMENT TERMS",

mp.organization_code "WARE HOUSE", ol.meaning "FREIGHT TERMS",

ol1.meaning "SHIPMENT PRIORITY", al.meaning "FOB",

rsa.NAME "SALESPERSON",

hcsua.LOCATION

','

hl.address2

','

hl.city

','

hl.state

','

hl.postal_code

','

hl.county "BILL TO LOCATION",

hcsua1.LOCATION

','

hl1.address2

','

hl1.city

','

hl1.state

','

hl1.postal_code

','

hl1.county "SHIP TO LOCATION"

FROM oe_order_headers_all ooha,

oe_transaction_types_tl ott,

qp_list_headers qh,

ra_terms_tl rtt,

mtl_parameters mp,

ra_salesreps_all rsa,

hz_cust_accounts hca,

hz_parties hp,

hz_parties hp1,

hz_locations hl,

hz_locations hl1,

hz_cust_acct_sites_all hcasa,

hz_cust_acct_sites_all hcasa1,

hz_cust_site_uses_all hcsua,

hz_cust_site_uses_all hcsua1,

hz_party_sites hps,

hz_party_sites hps1,

oe_lookups ol,

oe_lookups ol1,

ar_lookups al

WHERE 1 = 1

AND ooha.order_number = 10265

AND ooha.sold_to_org_id = hca.cust_account_id

AND ooha.order_type_id = ott.transaction_type_id

AND ott.LANGUAGE = USERENV ('LANG')

AND rtt.LANGUAGE = USERENV ('LANG')

AND rtt.term_id = ooha.payment_term_id

AND qh.list_header_id = ooha.price_list_id

AND mp.organization_id = ooha.ship_from_org_id

AND ooha.salesrep_id = rsa.salesrep_id

AND hca.party_id = hp.party_id

AND hca.party_id = hp1.party_id

AND ooha.invoice_to_org_id = hcsua.site_use_id(+)

AND hcsua.cust_acct_site_id = hcasa.cust_acct_site_id(+)

AND hcasa.party_site_id = hps.party_site_id(+)

AND hl.location_id(+) = hps.location_id

AND ooha.ship_to_org_id = hcsua1.site_use_id(+)

AND hcsua1.cust_acct_site_id = hcasa1.cust_acct_site_id(+)

AND hcasa1.party_site_id = hps1.party_site_id(+)

AND hl1.location_id(+) = hps1.location_id

AND ooha.freight_terms_code = ol.lookup_code

AND ooha.shipment_priority_code = ol1.lookup_code

AND al.lookup_code = ooha.fob_point_code;

Query to retrieve the line information of the Sales Order Form is:

SELECT oola.line_number "LINE NUMBER", oola.ordered_item "ORDERED ITEM",

oola.ordered_quantity "QTY", oola.order_quantity_uom "UOM",

oola.unit_selling_price "UNIT SELLING PRICE",

oola.cancelled_quantity "QTY CANCELLED",

oola.shipped_quantity "QTY SHIPPED", oola.tax_code "TAX CODE",

ott.NAME "LINE TYPE",

DECODE (opa.line_id,

NULL, DECODE (opa.credit_or_charge_flag,

'C', (-1) * opa.operand,

opa.operand

),

DECODE (opa.credit_or_charge_flag,

'C', DECODE (opa.arithmetic_operator,

'LUMPSUM', (-1) * (opa.operand),

(-1)

* ( oola.ordered_quantity

* opa.adjusted_amount

)

),

DECODE (opa.arithmetic_operator,

'LUMPSUM', opa.operand,

(oola.ordered_quantity * opa.adjusted_amount

)

)

)

) "LINE_CHARGES",

ol.meaning "CALCULATE PRICE FLAG", oola.pricing_quantity,

oola.unit_selling_price, oola.unit_list_price, oola.tax_value,

(oola.shipped_quantity) * (oola.unit_selling_price) "LINE TOTAL"

((oola.shipped_quantity) * (oola.unit_selling_price)

)

+ (DECODE (opa.line_id,

NULL, DECODE (opa.credit_or_charge_flag,

'C', (-1) * opa.operand,

opa.operand

),

DECODE (opa.credit_or_charge_flag,

'C', DECODE (opa.arithmetic_operator,

'LUMPSUM', (-1) * (opa.operand),

(-1)

* ( oola.ordered_quantity

* opa.adjusted_amount

)

),

DECODE (opa.arithmetic_operator,

'LUMPSUM', opa.operand,

(oola.ordered_quantity * opa.adjusted_amount

)

)

)

)

) "ORDER TOTAL"

FROM oe_order_lines_all oola,

oe_transaction_types_tl ott,

oe_price_adjustments opa,

oe_order_headers_all ooha,

oe_lookups ol

WHERE 1 = 1

AND oola.line_type_id = ott.transaction_type_id

AND opa.header_id = ooha.header_id

AND opa.line_id = oola.line_id(+)

AND opa.list_line_type_code = 'FREIGHT_CHARGE'

AND opa.applied_flag = 'Y'

AND ott.LANGUAGE = USERENV ('LANG')

AND oola.header_id = 1547

AND ol.lookup_type = 'CALCULATE_PRICE_FLAG'

AND oola.calculate_price_flag = ol.lookup_code;

SELECT ooha.header_id, ooha.order_number, ott.NAME "ORDER TYPE",

hp.party_name "CUSTOMER", hca.account_number "CUSTOMER NUMBER",

ooha.ordered_date "DATE ORDERED", qh.NAME "PRICE LIST",

ooha.transactional_curr_code "CURRENCY",

ooha.cust_po_number "CUSTOMER PO",

ooha.freight_carrier_code "SHIPPING METHOD",

ooha.flow_status_code "STATUS", rtt.NAME "PAYMENT TERMS",

mp.organization_code "WARE HOUSE", ol.meaning "FREIGHT TERMS",

ol1.meaning "SHIPMENT PRIORITY", al.meaning "FOB",

rsa.NAME "SALESPERSON",

hcsua.LOCATION

','

hl.address2

','

hl.city

','

hl.state

','

hl.postal_code

','

hl.county "BILL TO LOCATION",

hcsua1.LOCATION

','

hl1.address2

','

hl1.city

','

hl1.state

','

hl1.postal_code

','

hl1.county "SHIP TO LOCATION"

FROM oe_order_headers_all ooha,

oe_transaction_types_tl ott,

qp_list_headers qh,

ra_terms_tl rtt,

mtl_parameters mp,

ra_salesreps_all rsa,

hz_cust_accounts hca,

hz_parties hp,

hz_parties hp1,

hz_locations hl,

hz_locations hl1,

hz_cust_acct_sites_all hcasa,

hz_cust_acct_sites_all hcasa1,

hz_cust_site_uses_all hcsua,

hz_cust_site_uses_all hcsua1,

hz_party_sites hps,

hz_party_sites hps1,

oe_lookups ol,

oe_lookups ol1,

ar_lookups al

WHERE 1 = 1

AND ooha.order_number = 10265

AND ooha.sold_to_org_id = hca.cust_account_id

AND ooha.order_type_id = ott.transaction_type_id

AND ott.LANGUAGE = USERENV ('LANG')

AND rtt.LANGUAGE = USERENV ('LANG')

AND rtt.term_id = ooha.payment_term_id

AND qh.list_header_id = ooha.price_list_id

AND mp.organization_id = ooha.ship_from_org_id

AND ooha.salesrep_id = rsa.salesrep_id

AND hca.party_id = hp.party_id

AND hca.party_id = hp1.party_id

AND ooha.invoice_to_org_id = hcsua.site_use_id(+)

AND hcsua.cust_acct_site_id = hcasa.cust_acct_site_id(+)

AND hcasa.party_site_id = hps.party_site_id(+)

AND hl.location_id(+) = hps.location_id

AND ooha.ship_to_org_id = hcsua1.site_use_id(+)

AND hcsua1.cust_acct_site_id = hcasa1.cust_acct_site_id(+)

AND hcasa1.party_site_id = hps1.party_site_id(+)

AND hl1.location_id(+) = hps1.location_id

AND ooha.freight_terms_code = ol.lookup_code

AND ooha.shipment_priority_code = ol1.lookup_code

AND al.lookup_code = ooha.fob_point_code;

Query to retrieve the line information of the Sales Order Form is:

SELECT oola.line_number "LINE NUMBER", oola.ordered_item "ORDERED ITEM",

oola.ordered_quantity "QTY", oola.order_quantity_uom "UOM",

oola.unit_selling_price "UNIT SELLING PRICE",

oola.cancelled_quantity "QTY CANCELLED",

oola.shipped_quantity "QTY SHIPPED", oola.tax_code "TAX CODE",

ott.NAME "LINE TYPE",

DECODE (opa.line_id,

NULL, DECODE (opa.credit_or_charge_flag,

'C', (-1) * opa.operand,

opa.operand

),

DECODE (opa.credit_or_charge_flag,

'C', DECODE (opa.arithmetic_operator,

'LUMPSUM', (-1) * (opa.operand),

(-1)

* ( oola.ordered_quantity

* opa.adjusted_amount

)

),

DECODE (opa.arithmetic_operator,

'LUMPSUM', opa.operand,

(oola.ordered_quantity * opa.adjusted_amount

)

)

)

) "LINE_CHARGES",

ol.meaning "CALCULATE PRICE FLAG", oola.pricing_quantity,

oola.unit_selling_price, oola.unit_list_price, oola.tax_value,

(oola.shipped_quantity) * (oola.unit_selling_price) "LINE TOTAL"

((oola.shipped_quantity) * (oola.unit_selling_price)

)

+ (DECODE (opa.line_id,

NULL, DECODE (opa.credit_or_charge_flag,

'C', (-1) * opa.operand,

opa.operand

),

DECODE (opa.credit_or_charge_flag,

'C', DECODE (opa.arithmetic_operator,

'LUMPSUM', (-1) * (opa.operand),

(-1)

* ( oola.ordered_quantity

* opa.adjusted_amount

)

),

DECODE (opa.arithmetic_operator,

'LUMPSUM', opa.operand,

(oola.ordered_quantity * opa.adjusted_amount

)

)

)

)

) "ORDER TOTAL"

FROM oe_order_lines_all oola,

oe_transaction_types_tl ott,

oe_price_adjustments opa,

oe_order_headers_all ooha,

oe_lookups ol

WHERE 1 = 1

AND oola.line_type_id = ott.transaction_type_id

AND opa.header_id = ooha.header_id

AND opa.line_id = oola.line_id(+)

AND opa.list_line_type_code = 'FREIGHT_CHARGE'

AND opa.applied_flag = 'Y'

AND ott.LANGUAGE = USERENV ('LANG')

AND oola.header_id = 1547

AND ol.lookup_type = 'CALCULATE_PRICE_FLAG'

AND oola.calculate_price_flag = ol.lookup_code;

Tuesday, August 26, 2008

Steps to enable/display the Legal Disclaimer page and text in Employee Self Service

Responsibility: Global Benefits Manager

1. Navigation: Total Compensation : General Definitions : Additional Setup : Communication Types

2. Query for "Legal Disclaimer"

3. Select 'To Be Sent' Code of "Not Applicable"

4. In Usage field select 'Self Service Instruction Text'

5. Save record.

6. Click Usages

7. Note: In the Legal Disclaimer we cannot specify any Communication Usages, such as Life Event, Program, Plan, or Rule, to limit the display of the instruction text that we write. So all these fields should be left blank.

8. Enter the disclaimer text in the 'Self Service Instruction Text' field.

1. Navigation: Total Compensation : General Definitions : Additional Setup : Communication Types

2. Query for "Legal Disclaimer"

3. Select 'To Be Sent' Code of "Not Applicable"

4. In Usage field select 'Self Service Instruction Text'

5. Save record.

6. Click Usages

7. Note: In the Legal Disclaimer we cannot specify any Communication Usages, such as Life Event, Program, Plan, or Rule, to limit the display of the instruction text that we write. So all these fields should be left blank.

8. Enter the disclaimer text in the 'Self Service Instruction Text' field.

XML Sql Calls Concept

The XML Publisher data engine enables you to rapidly generate any kind of XML data structure against the Oracle database in a scalable, efficient manner. The data template is the method by which you communicate your request for data to the data engine. It is an XML document whose elements collectively define how the data engine will process the template to generate the XML...

Click here to read the complete article on XML Sql Calls Concept

Click here to read the complete article on XML Sql Calls Concept

Monday, August 25, 2008

How to get the create statement of sql view

SELECT owner "view owner name", view_name "view name"

FROM all_views

WHERE view_name = '<< YOURVIEW >>'

SELECT DBMS_METADATA.get_ddl ('VIEW', '<< view name >>',

'<< view owner name >>')

FROM DUAL

FROM all_views

WHERE view_name = '<< YOURVIEW >>'

SELECT DBMS_METADATA.get_ddl ('VIEW', '<< view name >>',

'<< view owner name >>')

FROM DUAL

Spool data from sql*plus to a .csv file

We need to login the sql*plus, create an empty 'test.xls' file in the 'c:' directory and then type the below statements in sql*plus.

SET ECHO OFF

SET FEEDBACK OFF

SET HEADING OFF

SET LINESIZE 32000

SET PAGESIZE 0

SET TERMOUT OFF

SET TRIMSPOOL ON

spool c:\test.csv

PROMPT employeename,emp_id

select employeename||','||emp_id

from employees;

SPOOL OFF

SET ECHO ON

SET FEEDBACK ON

SET HEADING ON

SET LINESIZE 80

SET PAGESIZE 24

SET TERMOUT ON

SET TRIMSPOOL OFF

to spool data into .csv file

SET ECHO OFF

SET FEEDBACK OFF

SET HEADING OFF

SET LINESIZE 32000

SET PAGESIZE 0

SET TERMOUT OFF

SET TRIMSPOOL ON

spool c:\test.csv

PROMPT employeename,emp_id

select employeename||','||emp_id

from employees;

SPOOL OFF

SET ECHO ON

SET FEEDBACK ON

SET HEADING ON

SET LINESIZE 80

SET PAGESIZE 24

SET TERMOUT ON

SET TRIMSPOOL OFF

to spool data into .csv file

Query to extract all application users and associated privileges information

SELECT UNIQUE SUBSTR (u.user_name, 1, 30) user_name,

SUBSTR (r.responsibility_name, 1, 60) responsiblity,

SUBSTR (a.application_name, 1, 50) application

FROM fnd_user u,

fnd_user_resp_groups g,

fnd_application_tl a,

fnd_responsibility_tl r

WHERE g.user_id(+) = u.user_id

AND g.responsibility_application_id = a.application_id

AND a.application_id = r.application_id

AND g.responsibility_id = r.responsibility_id

AND u.user_name = '&USER'

ORDER BY SUBSTR (user_name, 1, 30),

SUBSTR (a.application_name, 1, 50),

SUBSTR (r.responsibility_name, 1, 60)

SUBSTR (r.responsibility_name, 1, 60) responsiblity,

SUBSTR (a.application_name, 1, 50) application

FROM fnd_user u,

fnd_user_resp_groups g,

fnd_application_tl a,

fnd_responsibility_tl r

WHERE g.user_id(+) = u.user_id

AND g.responsibility_application_id = a.application_id

AND a.application_id = r.application_id

AND g.responsibility_id = r.responsibility_id

AND u.user_name = '&USER'

ORDER BY SUBSTR (user_name, 1, 30),

SUBSTR (a.application_name, 1, 50),

SUBSTR (r.responsibility_name, 1, 60)

Script to get the invoice and supplier details

SELECT ai.invoice_id, ai.invoice_num, ai.invoice_type_lookup_code,

ai.invoice_date, (SELECT segment1

FROM po_headers_all

WHERE po_header_id = ai.po_header_id) po_number,

ai.vendor_id, pv.segment1 vendor_number, pv.vendor_name,

ai.vendor_site_id, pvs.vendor_site_code,

( NVL (ai.invoice_amount, 0)

- NVL (ai.amount_paid, 0)

+ NVL (ai.discount_amount_taken, 0)

) remaining_amount,

ai.invoice_currency_code, ai.exchange_rate, ai.exchange_rate_type,

ai.exchange_date, ai.terms_id, (SELECT NAME

FROM ap_terms

WHERE term_id = ai.terms_id) terms,

ai.description, ai.awt_group_id,

(SELECT awt.NAME

FROM ap_awt_groups awt

WHERE awt.GROUP_ID = ai.awt_group_id) awt_group, ai.last_update_date,

ai.last_updated_by, ai.last_update_login, ai.creation_date,

ai.created_by, ai.attribute_category, ai.attribute1, ai.attribute2,

ai.attribute3, ai.attribute4, ai.attribute5, ai.attribute6,

ai.attribute7, ai.attribute8, ai.attribute9, ai.attribute10,

ai.attribute11, ai.attribute12, ai.attribute13, ai.attribute14,

ai.attribute15, ai.global_attribute_category, ai.global_attribute1,

ai.global_attribute2, ai.global_attribute3, ai.global_attribute4,

ai.global_attribute5, ai.global_attribute6, ai.global_attribute7,

ai.global_attribute8, ai.global_attribute9, ai.global_attribute10,

ai.global_attribute11, ai.global_attribute12, ai.global_attribute13,

ai.global_attribute14, ai.global_attribute15, ai.global_attribute16,

ai.global_attribute17, ai.global_attribute18, ai.global_attribute19,

ai.global_attribute20, ai.payment_cross_rate_type,

ai.payment_cross_rate_date, ai.payment_cross_rate,

ai.payment_currency_code, ai.doc_category_code, ai.voucher_num,

ai.payment_method_lookup_code, ai.pay_group_lookup_code,

ai.goods_received_date, ai.invoice_received_date, ai.gl_date,

ai.accts_pay_code_combination_id, ai.ussgl_transaction_code,

ai.exclusive_payment_flag, ai.org_id, ai.amount_applicable_to_discount,

pvs.email_address, ai.terms_date, ai.requester_id

FROM ap_invoices_all ai, po_vendors pv, po_vendor_sites_all pvs

WHERE 1 = 1

AND ai.vendor_id = pv.vendor_id

AND pvs.vendor_id = pv.vendor_id

AND ai.vendor_site_id = pvs.vendor_site_id

ai.invoice_date, (SELECT segment1

FROM po_headers_all

WHERE po_header_id = ai.po_header_id) po_number,

ai.vendor_id, pv.segment1 vendor_number, pv.vendor_name,

ai.vendor_site_id, pvs.vendor_site_code,

( NVL (ai.invoice_amount, 0)

- NVL (ai.amount_paid, 0)

+ NVL (ai.discount_amount_taken, 0)

) remaining_amount,

ai.invoice_currency_code, ai.exchange_rate, ai.exchange_rate_type,

ai.exchange_date, ai.terms_id, (SELECT NAME

FROM ap_terms

WHERE term_id = ai.terms_id) terms,

ai.description, ai.awt_group_id,

(SELECT awt.NAME

FROM ap_awt_groups awt

WHERE awt.GROUP_ID = ai.awt_group_id) awt_group, ai.last_update_date,

ai.last_updated_by, ai.last_update_login, ai.creation_date,

ai.created_by, ai.attribute_category, ai.attribute1, ai.attribute2,

ai.attribute3, ai.attribute4, ai.attribute5, ai.attribute6,

ai.attribute7, ai.attribute8, ai.attribute9, ai.attribute10,

ai.attribute11, ai.attribute12, ai.attribute13, ai.attribute14,

ai.attribute15, ai.global_attribute_category, ai.global_attribute1,

ai.global_attribute2, ai.global_attribute3, ai.global_attribute4,

ai.global_attribute5, ai.global_attribute6, ai.global_attribute7,

ai.global_attribute8, ai.global_attribute9, ai.global_attribute10,

ai.global_attribute11, ai.global_attribute12, ai.global_attribute13,

ai.global_attribute14, ai.global_attribute15, ai.global_attribute16,

ai.global_attribute17, ai.global_attribute18, ai.global_attribute19,

ai.global_attribute20, ai.payment_cross_rate_type,

ai.payment_cross_rate_date, ai.payment_cross_rate,

ai.payment_currency_code, ai.doc_category_code, ai.voucher_num,

ai.payment_method_lookup_code, ai.pay_group_lookup_code,

ai.goods_received_date, ai.invoice_received_date, ai.gl_date,

ai.accts_pay_code_combination_id, ai.ussgl_transaction_code,

ai.exclusive_payment_flag, ai.org_id, ai.amount_applicable_to_discount,

pvs.email_address, ai.terms_date, ai.requester_id

FROM ap_invoices_all ai, po_vendors pv, po_vendor_sites_all pvs

WHERE 1 = 1

AND ai.vendor_id = pv.vendor_id

AND pvs.vendor_id = pv.vendor_id

AND ai.vendor_site_id = pvs.vendor_site_id

Glasses icon in AR Transaction Form

User asked:

In the Transaction window of AR inquiry responsibility,the button(glasses icon)which is next to the transaction number is not giving output after completing the transaction.

Can anyone give idea about profile option setting for getting the functionality of icon which is next to transaction number of AR inquiry transaction window

We replied:

The Grant box is unchecked for function 'Bill Management: Real Preview ' which is been attached to the AR_NAVIGATE_GUI menu.

Inorder to resolve this issue we need to check grant check box for function 'Bill Management: Real Preview ' which is attached to the AR_NAVIGATE_GUI menu and then recompile the menu.

We need to make sure that AR_NAVIGATE_GUI menu is attached to the Receivables responsibility and OIR: Bill Presentment Architecture Enabled profile option is set to Yes in Sysadmin at the Site level and Responsibility level.

In the Transaction window of AR inquiry responsibility,the button(glasses icon)which is next to the transaction number is not giving output after completing the transaction.

Can anyone give idea about profile option setting for getting the functionality of icon which is next to transaction number of AR inquiry transaction window

We replied:

The Grant box is unchecked for function 'Bill Management: Real Preview ' which is been attached to the AR_NAVIGATE_GUI menu.

Inorder to resolve this issue we need to check grant check box for function 'Bill Management: Real Preview ' which is attached to the AR_NAVIGATE_GUI menu and then recompile the menu.

We need to make sure that AR_NAVIGATE_GUI menu is attached to the Receivables responsibility and OIR: Bill Presentment Architecture Enabled profile option is set to Yes in Sysadmin at the Site level and Responsibility level.

Quickest way to generate the columns used in the ctl file

Select decode (column_id, 1, ' ', ' , ') ||

rpad (column_name, 33, ' ') ||

decode (data_type,

'VARCHAR2', 'CHAR NULLIF ('||column_name||'=BLANKS)',

'FLOAT', 'DECIMAL EXTERNAL NULLIF('||column_name||'=BLANKS)',

'NUMBER', decode (data_precision, 0,

'INTEGER EXTERNAL NULLIF ('||column_name||

'=BLANKS)', decode (data_scale, 0,

'INTEGER EXTERNAL NULLIF ('||

column_name||'=BLANKS)',

'DECIMAL EXTERNAL NULLIF ('||

column_name||'=BLANKS)')),

'DATE', 'DATE "mm/dd/yy" NULLIF ('||

column_name||'=BLANKS)', null)

from all_tab_columns

where table_name = upper ('$LOADTABLE')

and owner = upper ('$SCHEMA')

order by column_id;

Note:

1. In the $LOADTABLE pass the table to which you want to SQL the data

2. In the $SCHEMA pass the schema in which the table is located.

rpad (column_name, 33, ' ') ||

decode (data_type,

'VARCHAR2', 'CHAR NULLIF ('||column_name||'=BLANKS)',

'FLOAT', 'DECIMAL EXTERNAL NULLIF('||column_name||'=BLANKS)',

'NUMBER', decode (data_precision, 0,

'INTEGER EXTERNAL NULLIF ('||column_name||

'=BLANKS)', decode (data_scale, 0,

'INTEGER EXTERNAL NULLIF ('||

column_name||'=BLANKS)',

'DECIMAL EXTERNAL NULLIF ('||

column_name||'=BLANKS)')),

'DATE', 'DATE "mm/dd/yy" NULLIF ('||

column_name||'=BLANKS)', null)

from all_tab_columns

where table_name = upper ('$LOADTABLE')

and owner = upper ('$SCHEMA')

order by column_id;

Note:

1. In the $LOADTABLE pass the table to which you want to SQL the data

2. In the $SCHEMA pass the schema in which the table is located.

How to restrict Who columns from being updated in HRMS

The "last_update_date" and "last_updated_by" fields are for audit purpose and are automatically used by the APIs.

set the parameter hr_general.g_data_migrator_mode = Y to disable entering who columns before your script.

Note: Although you can disable the ROW WHO columns from being updated as indicated, this will potentially cause an auditing nightmare if the batch update system updates incorrect data or fields. In such a case, the last person who updated the row thru the application pages or forms (i.e in a valid manner) might be accused of trickery :).

Please make sure that you test it in your test instance before moving to PROD.

set the parameter hr_general.g_data_migrator_mode = Y to disable entering who columns before your script.

Note: Although you can disable the ROW WHO columns from being updated as indicated, this will potentially cause an auditing nightmare if the batch update system updates incorrect data or fields. In such a case, the last person who updated the row thru the application pages or forms (i.e in a valid manner) might be accused of trickery :).

Please make sure that you test it in your test instance before moving to PROD.

p_datetrack_update_mode in HRMS

DateTrack History is available in most windows where you can enter date tracked information. DateTrack History enables you to track changes made to records and fields, and by whom. DateTrack adds the dimension of time to an application's database. The value of a DateTracked record depends on the date from which you are viewing the data. Forexample, querying an employee's annual salary with an effective date of 12-JUL-1992might give a different value than a query with an effective date of 01-DEC-1992. However, the application and the user see the employee's pay as a single record.

A DateTracked (DT) record is what the application and the user see: a single DT record for each key value. However, this DT record may change over time, so it may correspond to one or more physical rows in the database. The history for the record is held by storing a row when the record is created, and an extra row every time the record changes. To control these rows, every DateTracked table must include these columns:

EFFECTIVE_START_DATE DATE NOT NULL

EFFECTIVE_END_DATE DATE NOT NULL

The effective start date indicates when the record was inserted. The effective end date indicates when the record was deleted or updated. A deleted record has the highest end date of all the rows with that key, but for an updated record there will be at least one row for this key with a higher effective end date.

As time support is not provided, the effective start date commences at 0000 hours and the effective end date finishes at 2359 hours. This means that a DT record can change at most once per day.

There are two values we can pass when we try to update or load an employee information using the p_datetrack_update_mode and both these have there own advantages and disadvantages.

1. Update: This will end date the already existing entry in the base table and then create a new entry with the start date as sysdate. Updated values are written to the database as a new row, effective from today until 31-DEC-4712. The old values remain effective up to and including yesterday.

Adv: This will keep track of your history data and at some point if you want to check your previous data it would be very helpfull. The updated values override the old record values and inherit the same effective dates.

Disadv: Creates as many number of records as we perform the updates on the record and this will increase the data in the base tables.

2. Correction: This will only update the relevant columns that is passed to the API.

Adv: Doesnot create any record while performing the updates on the record hence reducing the content of data in the base tables.

Disadv: Doesnot keep track of your history data.

A DateTracked (DT) record is what the application and the user see: a single DT record for each key value. However, this DT record may change over time, so it may correspond to one or more physical rows in the database. The history for the record is held by storing a row when the record is created, and an extra row every time the record changes. To control these rows, every DateTracked table must include these columns:

EFFECTIVE_START_DATE DATE NOT NULL

EFFECTIVE_END_DATE DATE NOT NULL

The effective start date indicates when the record was inserted. The effective end date indicates when the record was deleted or updated. A deleted record has the highest end date of all the rows with that key, but for an updated record there will be at least one row for this key with a higher effective end date.

As time support is not provided, the effective start date commences at 0000 hours and the effective end date finishes at 2359 hours. This means that a DT record can change at most once per day.

There are two values we can pass when we try to update or load an employee information using the p_datetrack_update_mode and both these have there own advantages and disadvantages.

1. Update: This will end date the already existing entry in the base table and then create a new entry with the start date as sysdate. Updated values are written to the database as a new row, effective from today until 31-DEC-4712. The old values remain effective up to and including yesterday.

Adv: This will keep track of your history data and at some point if you want to check your previous data it would be very helpfull. The updated values override the old record values and inherit the same effective dates.

Disadv: Creates as many number of records as we perform the updates on the record and this will increase the data in the base tables.

2. Correction: This will only update the relevant columns that is passed to the API.

Adv: Doesnot create any record while performing the updates on the record hence reducing the content of data in the base tables.

Disadv: Doesnot keep track of your history data.

Shameem Bauccha has explained quite well about the datetrack in his blog. Please refer to his blog at: http://oracledocs.wordpress.com/2009/06/22/hrms-date-track/

Labels:

Datetrack,

p_datetrack_update_mode in HRMS

Sunday, August 24, 2008

AP Source Setup

For the AP Source Setup use the following navigation:

AP Responsibility -> Setup -> Lookups -> Payables. Query for the type 'SOURCE' and add the desired source in the line.

AP Responsibility -> Setup -> Lookups -> Payables. Query for the type 'SOURCE' and add the desired source in the line.

Friday, August 22, 2008

A comparative analysis between SQL*LOADER and UTL_FILE utility

In implementing new systems we come across problems of importing "alien" data. This may be coming from a legacy system or an on-going system. This data is transported via extract files from the legacy system to the Oracle system. The gateway to Oracle for this data is SQL*Loader and data is loaded into tables via a control script into tables.

Typically, the older systems do not have very normalized data, nor have they been operating with fully implemented database constraints. The lack of constraints over the years in legacy system can lead to bad data that has crept in. Therefore, while bringing external data into oracle system we need a refined set of checks and balances to ensure that we get good data. This requires a lot of programmatic control in the process of data-loading.

The approach applied in case of SQL* Loader is as follows :

1. Load the data into temporary tables via SQL*Loader via control file and make the data native to ORACLE.

2. Write a PL/SQL program to do the processing.

3. Load the data into live tables.

This approach has a lot of dependencies as well as a strong lack of integration of steps and programmatic control. To overcome this, we have analyzed another facility in that has been release Oracle 7.3.x onwards. It is called the UTL_FILE package. With some creative use of this package we can achieve whatever SQL*LOADER offers and in addition to that do some high level validation and complex data loading. In the following discussion a study of two tools is done.

A BRIEF OVERVIEW OF SQL*Loader:

SQL*Loader is a server utility for loading data from external data files into Oracle database. The basic advantage of using SQL*Loader is for simple loads and fast loading of data. It can load data into myriad data formats, perform elementary filtering, load data into multiple tables, and create one logical record from one or more physical records.

It creates a detailed log file, a bad file that contains rejected records and a discard file to hold the records that are selectively not loaded. The tool is executed from a command line and a username and password and the control file name and location are required to run it.

A BRIEF OVERVIEW OF UTL_FILE:

PL/SQL does not have text file input output capabilities but acquires it via UTL_FILE package. It provides rudimentary utility for reading ( as well as writing) files from within a PL/SQL program. The lines in the file are read sequentially and hence it effects the performance of the program.

The UTL_FILE package can be wrapped around with a PL/SQL program and since this package is integrated with PL/SQL it provides us the tremendous ability for flexing our "programming muscles." Some procedures and functions can be added to this wrapper program that serve as a handy "tool" for doing normal file reading operations. With this approach we can achieve whatever SQL*Loader can do and much more. The security mechanism for UTL_FILE is achieved by defining a parameter in INIT.ora file called utl_file_dir parameter. The directories that UTL_FILE can read from and write to need to have permissions of Oracle instance owner and the user running the package.

CONCLUSIONS:

The comparative analysis of SQL*Loader and UTL_FILE reveals that these tools are suitable to our environment subject to the conditions of our needs.

If the data load is complex (as is the case in relational databases) UTL_FILE seems to be the tool of choice. This tool does require programmatic effort in terms of writing a wrapper package but the subsequent effort in this direction is greatly reduced once the initial tool kit is built for your environment.

The UTL_FILE tightly integrates the file input with the programmatic control and the data manipulation inside a single PL/SQL unit. There are disadvantages of speed in loading in case of UTL_FILE but these are totally offset by the programmatic control it offers and the integration it brings in.

Thus we find that UTL_FILE tool bridges the gap left by SQL*Loader for complex data loads.

Typically, the older systems do not have very normalized data, nor have they been operating with fully implemented database constraints. The lack of constraints over the years in legacy system can lead to bad data that has crept in. Therefore, while bringing external data into oracle system we need a refined set of checks and balances to ensure that we get good data. This requires a lot of programmatic control in the process of data-loading.

The approach applied in case of SQL* Loader is as follows :

1. Load the data into temporary tables via SQL*Loader via control file and make the data native to ORACLE.

2. Write a PL/SQL program to do the processing.

3. Load the data into live tables.

This approach has a lot of dependencies as well as a strong lack of integration of steps and programmatic control. To overcome this, we have analyzed another facility in that has been release Oracle 7.3.x onwards. It is called the UTL_FILE package. With some creative use of this package we can achieve whatever SQL*LOADER offers and in addition to that do some high level validation and complex data loading. In the following discussion a study of two tools is done.

A BRIEF OVERVIEW OF SQL*Loader:

SQL*Loader is a server utility for loading data from external data files into Oracle database. The basic advantage of using SQL*Loader is for simple loads and fast loading of data. It can load data into myriad data formats, perform elementary filtering, load data into multiple tables, and create one logical record from one or more physical records.

It creates a detailed log file, a bad file that contains rejected records and a discard file to hold the records that are selectively not loaded. The tool is executed from a command line and a username and password and the control file name and location are required to run it.

A BRIEF OVERVIEW OF UTL_FILE:

PL/SQL does not have text file input output capabilities but acquires it via UTL_FILE package. It provides rudimentary utility for reading ( as well as writing) files from within a PL/SQL program. The lines in the file are read sequentially and hence it effects the performance of the program.

The UTL_FILE package can be wrapped around with a PL/SQL program and since this package is integrated with PL/SQL it provides us the tremendous ability for flexing our "programming muscles." Some procedures and functions can be added to this wrapper program that serve as a handy "tool" for doing normal file reading operations. With this approach we can achieve whatever SQL*Loader can do and much more. The security mechanism for UTL_FILE is achieved by defining a parameter in INIT

CONCLUSIONS:

The comparative analysis of SQL*Loader and UTL_FILE reveals that these tools are suitable to our environment subject to the conditions of our needs.

If the data load is complex (as is the case in relational databases) UTL_FILE seems to be the tool of choice. This tool does require programmatic effort in terms of writing a wrapper package but the subsequent effort in this direction is greatly reduced once the initial tool kit is built for your environment.

The UTL_FILE tightly integrates the file input with the programmatic control and the data manipulation inside a single PL/SQL unit. There are disadvantages of speed in loading in case of UTL_FILE but these are totally offset by the programmatic control it offers and the integration it brings in.

Thus we find that UTL_FILE tool bridges the gap left by SQL*Loader for complex data loads.

Script to create an Oracle directory entry in dba_directories table

Any file in any directory accessible to the user can be attached (not just the directories listed for the utl_file_dir parameter in the init.ora file).

HOWEVER, if we are using this to send e-mail's with attached files (or reading the message text or message html from a file) from a trigger or from some other SQL that you can't or don't want to have a commit done, you will first need to create an Oracle directory entry for the directory containing the attached files and grant read access to it to public, such as:

DECLARE

l_directory_name VARCHAR2 (2000);

l_directory_path VARCHAR2 (2000);

BEGIN

l_directory_name := 'CESDIR072917';

l_directory_path :=

'/dba/u01/app/applmgr/common/xxx/admin/out/xxx';

EXECUTE IMMEDIATE 'create or replace directory '

|| l_directory_name

|| ' as '''

|| l_directory_path

|| '''';

EXECUTE IMMEDIATE 'grant read on directory '

|| l_directory_name

|| ' to public';

COMMIT;

END;

/

In this script the local variable l_directory_name and l_directory_path are used to define the directory name and the directory path from which you want to access the file respectively.

HOWEVER, if we are using this to send e-mail's with attached files (or reading the message text or message html from a file) from a trigger or from some other SQL that you can't or don't want to have a commit done, you will first need to create an Oracle directory entry for the directory containing the attached files and grant read access to it to public, such as:

DECLARE

l_directory_name VARCHAR2 (2000);

l_directory_path VARCHAR2 (2000);

BEGIN

l_directory_name := 'CESDIR072917';

l_directory_path :=

'/dba/u01/app/applmgr/common/xxx/admin/out/xxx';

EXECUTE IMMEDIATE 'create or replace directory '

|| l_directory_name

|| ' as '''

|| l_directory_path

|| '''';

EXECUTE IMMEDIATE 'grant read on directory '

|| l_directory_name

|| ' to public';

COMMIT;

END;

/

In this script the local variable l_directory_name and l_directory_path are used to define the directory name and the directory path from which you want to access the file respectively.

Important Base tables for HRMS

Some of the very important base tables in HRMS are as follows:

1. per_all_people_f

This table will provide us the very basic information about the employee. The very data from the first screen we see when we open the 'People -> Enter and Maintain' form goes into this table.

2. per_all_assignments_f

This table will store all the information which is been entered in the employee assignment form.

3. per_addresses

This table will store all the information which is been entered in the employee address form.

4. per_pay_proposals

This table will store all the information which is been entered in the employee salary form.

5. per_person_types_tl

This table is used to find the type of the employee. This table is linked with the per_all_people_f with the person_type_id to find out the type of person.

6. per_jobs_tl

This table will contain the various types of JOBS in oracle. This table is been linked with the per_all_assignments_f table to retrieve the correct job name from the employee.

7. per_grades_tl

This table will contain the various types of GRADES in oracle. This table is been linked with the per_all_assignments_f table to retrieve the correct grade name from the employee.

8. hr_locations_all

This table will contain the various LOCATIONS in oracle. This table is been linked with the per_all_assignments_f table to retrieve the correct location name from the employee.

9. pay_all_payrolls_f

This table will contain the various types of PAYROLLS in oracle. This table is been linked with the per_all_assignments_f table to retrieve the correct payroll name from the employee.

10. per_pay_bases

This table will contain the various types of PAY BASES in oracle. This table is been linked with the per_all_assignments_f table to retrieve the correct pay basis name from the employee.

11. per_assignment_status_types_tl

This table will contain the various types of assignment types in oracle. The assignment types generally would be 'Active Assignment' etc.. This table is been linked with the per_all_assignments_f table to retrieve the correct job name from the employee.

12. per_person_type_usages_f

This table will store the correct person type of the particluar employee. We should never depend on the person type present in the per_all_people_f table. Instead we need to link the person_id with this table and get the correct person type.

Please navigate to the below link for complete query to extract the employee data from Oracle.

http://arunrathod.blogspot.com/2008/08/hrms-employee-extraction-query.html

1. per_all_people_f

This table will provide us the very basic information about the employee. The very data from the first screen we see when we open the 'People -> Enter and Maintain' form goes into this table.

2. per_all_assignments_f

This table will store all the information which is been entered in the employee assignment form.

3. per_addresses

This table will store all the information which is been entered in the employee address form.

4. per_pay_proposals

This table will store all the information which is been entered in the employee salary form.

5. per_person_types_tl

This table is used to find the type of the employee. This table is linked with the per_all_people_f with the person_type_id to find out the type of person.

6. per_jobs_tl

This table will contain the various types of JOBS in oracle. This table is been linked with the per_all_assignments_f table to retrieve the correct job name from the employee.

7. per_grades_tl

This table will contain the various types of GRADES in oracle. This table is been linked with the per_all_assignments_f table to retrieve the correct grade name from the employee.

8. hr_locations_all

This table will contain the various LOCATIONS in oracle. This table is been linked with the per_all_assignments_f table to retrieve the correct location name from the employee.

9. pay_all_payrolls_f

This table will contain the various types of PAYROLLS in oracle. This table is been linked with the per_all_assignments_f table to retrieve the correct payroll name from the employee.

10. per_pay_bases

This table will contain the various types of PAY BASES in oracle. This table is been linked with the per_all_assignments_f table to retrieve the correct pay basis name from the employee.

11. per_assignment_status_types_tl

This table will contain the various types of assignment types in oracle. The assignment types generally would be 'Active Assignment' etc.. This table is been linked with the per_all_assignments_f table to retrieve the correct job name from the employee.

12. per_person_type_usages_f

This table will store the correct person type of the particluar employee. We should never depend on the person type present in the per_all_people_f table. Instead we need to link the person_id with this table and get the correct person type.

Please navigate to the below link for complete query to extract the employee data from Oracle.

http://arunrathod.blogspot.com/2008/08/hrms-employee-extraction-query.html

Wednesday, August 20, 2008

Oracle Code Tuning Tips

Performance tuning of code has always been the most difficult area of Oracle development. In fact, some developers say that tuning an unstructured Oracle code is like trying to work on a car while it's flying down the highway at 60 mph!.

Most Oracle experts generally use a top-down approach for tuning. This means that you start at a very high level, taking a look at the overall design of the code, and then successively drill down into more detail as you begin to tune the individual components within the code.

The goal of this article is to give you the general tools required to ensure that your Oracle code is performing at an optimal level. Although the article won't make you an expert, they'll give you a solid understanding of all the important Oracle tuning issues.

First of all, you have to know why it is slow. What is the real cause of your problem. If the reason why is not known, suggesting to rewrite the query, or hinting the query, suggesting parallellization is not very productive. Once in a while you may get lucky. But even then you have to realize that if your problem seems "solved", but you don't know why, nothing guarantees you that the problem won't come back tomorrow. So the first step should always be to investigate the root cause.

Some of the tools at your disposal are:

- dbms_profiler

- explain plan

- SQL*Trace / tkprof

dbms_profiler

Use dbms_profiler if you want to know where time is being spent in PL/SQL code. Statspack is a must if you are a dba and want to know what is going on in your entire database. For a single query or a small process, explain plan and SQL*Trace and tkprof are your tools.

Explain Plan

This is one of the best tip. When we find that there is a performance issue with the query we need to break the query into smaller chunks. This approach is best called as 'Divide and Rule'. To implement this we need to type the following in SQL*Plus:

explain plan for

select * from table(dbms_xplan.display);

When you get error messages or a message complaining about an old version of plan_table, make sure you run the script utlxplan.sql.

The output you get here basically shows you what the cost based optimizer expects. It gives you an idea on why the cost based optimizer chooses an access path.

SQL*Trace/tkprof

For this you have to type in SQL*Plus:

- alter session set sql_trace true;

-

- disconnect (this step is important, because it ensures all cursors get closed, and "row source operation" is generated)

- identify your trace file in the server directory as specified in the parameter user_dump_dest

- on your operating system: tkprof a.txt sys=no

The file a.txt will now give you valuable information on what has actually happened. No predictions but the truth.

By comparing the output from explain plan with the output from tkprof, you are able to identify the possible problem areas.

A note about database design and performance

The initial design of the Oracle tables and indexes is the single most critical factor in overall performance—and unfortunately, the design can rarely be changed once the system is placed into production use. So while the tuning techniques we'll be discussing can help you maximize the efficiency of your database engine, bear in mind that the initial design is the most important performance factor.

When a database is initially analyzed, the designer will often apply the normalization rules developed by E. F. Codd and C. J. Date. Their normalization study resulted in a set of table definitions that made it easier to design tables with controlled redundancy.

In the 1970s, database redundancy was difficult and expensive. As a result, database designers were taught to create databases in Third Normal Form (3NF), which prevented data duplication in multiple tables. But although a 3NF database was totally free of redundancy, the database queries could run very slowly because of the extra navigation required to access information. Over the 1980s and 1990s, database designers became more liberal with the introduction of redundant data to speed database queries.

The overall tuning approach

While there is no silver bullet for tuning Oracle unstructured code, a comprehensive approach can help ensure that all of the bases are covered and that no important tuning facts have been overlooked. When tuning the code, we have to start by taking a broad look at the overall client requirements and successively drilling down for more details.

PS: I am fully aware that this text is only a tiny fraction of what can be done, and that other people may choose different tools and actions, but the above gives you a very reasonable start at solving your performance problem.

To learn in more detail about Query optimization in Oracle please click here

Most Oracle experts generally use a top-down approach for tuning. This means that you start at a very high level, taking a look at the overall design of the code, and then successively drill down into more detail as you begin to tune the individual components within the code.

The goal of this article is to give you the general tools required to ensure that your Oracle code is performing at an optimal level. Although the article won't make you an expert, they'll give you a solid understanding of all the important Oracle tuning issues.

First of all, you have to know why it is slow. What is the real cause of your problem. If the reason why is not known, suggesting to rewrite the query, or hinting the query, suggesting parallellization is not very productive. Once in a while you may get lucky. But even then you have to realize that if your problem seems "solved", but you don't know why, nothing guarantees you that the problem won't come back tomorrow. So the first step should always be to investigate the root cause.

Some of the tools at your disposal are:

- dbms_profiler

- explain plan

- SQL*Trace / tkprof

dbms_profiler

Use dbms_profiler if you want to know where time is being spent in PL/SQL code. Statspack is a must if you are a dba and want to know what is going on in your entire database. For a single query or a small process, explain plan and SQL*Trace and tkprof are your tools.

Explain Plan

This is one of the best tip. When we find that there is a performance issue with the query we need to break the query into smaller chunks. This approach is best called as 'Divide and Rule'. To implement this we need to type the following in SQL*Plus:

explain plan for

select * from table(dbms_xplan.display);

When you get error messages or a message complaining about an old version of plan_table, make sure you run the script utlxplan.sql.

The output you get here basically shows you what the cost based optimizer expects. It gives you an idea on why the cost based optimizer chooses an access path.

SQL*Trace/tkprof

For this you have to type in SQL*Plus:

- alter session set sql_trace true;

-

- disconnect (this step is important, because it ensures all cursors get closed, and "row source operation" is generated)

- identify your trace file in the server directory as specified in the parameter user_dump_dest

- on your operating system: tkprof

The file a.txt will now give you valuable information on what has actually happened. No predictions but the truth.

By comparing the output from explain plan with the output from tkprof, you are able to identify the possible problem areas.

A note about database design and performance

The initial design of the Oracle tables and indexes is the single most critical factor in overall performance—and unfortunately, the design can rarely be changed once the system is placed into production use. So while the tuning techniques we'll be discussing can help you maximize the efficiency of your database engine, bear in mind that the initial design is the most important performance factor.

When a database is initially analyzed, the designer will often apply the normalization rules developed by E. F. Codd and C. J. Date. Their normalization study resulted in a set of table definitions that made it easier to design tables with controlled redundancy.

In the 1970s, database redundancy was difficult and expensive. As a result, database designers were taught to create databases in Third Normal Form (3NF), which prevented data duplication in multiple tables. But although a 3NF database was totally free of redundancy, the database queries could run very slowly because of the extra navigation required to access information. Over the 1980s and 1990s, database designers became more liberal with the introduction of redundant data to speed database queries.

The overall tuning approach

While there is no silver bullet for tuning Oracle unstructured code, a comprehensive approach can help ensure that all of the bases are covered and that no important tuning facts have been overlooked. When tuning the code, we have to start by taking a broad look at the overall client requirements and successively drilling down for more details.

PS: I am fully aware that this text is only a tiny fraction of what can be done, and that other people may choose different tools and actions, but the above gives you a very reasonable start at solving your performance problem.

To learn in more detail about Query optimization in Oracle please click here

Wednesday, August 13, 2008

Query that covers P2P life cycle

SELECT

A.ORG_ID “ORG ID”,

E.VENDOR_NAME “VENDOR NAME”,

UPPER(E.VENDOR_TYPE_LOOKUP_CODE) “VENDOR TYPE”,

F.VENDOR_SITE_CODE “VENDOR SITE”,

F.ADDRESS_LINE1 “ADDRESS”,

F.CITY “CITY”,

F.COUNTRY “COUNTRY”,

TO_CHAR(TRUNC(D.CREATION_DATE)) “PO DATE”,

D.SEGMENT1 “PO NUMBER”,

D.TYPE_LOOKUP_CODE “PO TYPE”,

C.QUANTITY_ORDERED “QTY ORDERED”,

C.QUANTITY_CANCELLED “QTY CANCALLED”,

G.ITEM_DESCRIPTION “ITEM DESCRIPTION”,

G.UNIT_PRICE “UNIT PRICE”,

(NVL(C.QUANTITY_ORDERED,0)-NVL(C.QUANTITY_CANCELLED,0))*NVL(G.UNIT_PRICE,0) “PO Line Amount”,

(SELECT

DECODE(PH.APPROVED_FLAG, ‘Y’, ‘Approved’)

FROM PO.PO_HEADERS_ALL PH

WHERE PH.PO_HEADER_ID = D.PO_HEADER_ID) “PO STATUS”,

A.INVOICE_TYPE_LOOKUP_CODE “INVOICE TYPE”,

A.INVOICE_AMOUNT “INVOICE AMOUNT”,

TO_CHAR(TRUNC(A.INVOICE_DATE)) “INVOICE DATE”,

A.INVOICE_NUM “INVOICE NUMBER”,

(SELECT

DECODE(X.MATCH_STATUS_FLAG, ‘A’, ‘Approved’)

FROM AP.AP_INVOICE_DISTRIBUTIONS_ALL X

WHERE X.INVOICE_DISTRIBUTION_ID = B.INVOICE_DISTRIBUTION_ID)”Invoice Approved?”,

A.AMOUNT_PAID,

H.AMOUNT,

I.CHECK_NUMBER “CHEQUE NUMBER”,

TO_CHAR(TRUNC(I.CHECK_DATE)) “PAYMENT DATE”

FROM AP.AP_INVOICES_ALL A,

AP.AP_INVOICE_DISTRIBUTIONS_ALL B,

PO.PO_DISTRIBUTIONS_ALL C,

PO.PO_HEADERS_ALL D,

PO.PO_VENDORS E,

PO.PO_VENDOR_SITES_ALL F,

PO.PO_LINES_ALL G,

AP.AP_INVOICE_PAYMENTS_ALL H,

AP.AP_CHECKS_ALL I

WHERE A.INVOICE_ID = B.INVOICE_ID

AND B.PO_DISTRIBUTION_ID = C. PO_DISTRIBUTION_ID (+)

AND C.PO_HEADER_ID = D.PO_HEADER_ID (+)

AND E.VENDOR_ID (+) = D.VENDOR_ID

AND F.VENDOR_SITE_ID (+) = D.VENDOR_SITE_ID

AND D.PO_HEADER_ID = G.PO_HEADER_ID

AND C.PO_LINE_ID = G.PO_LINE_ID

AND A.INVOICE_ID = H.INVOICE_ID

AND H.CHECK_ID = I.CHECK_ID

AND F.VENDOR_SITE_ID = I.VENDOR_SITE_ID

AND C.PO_HEADER_ID IS NOT NULL

AND A.PAYMENT_STATUS_FLAG = ‘Y’

AND D.TYPE_LOOKUP_CODE != ‘BLANKET’;

Note: To know more about P2P cycle, please navigate to the below link:

http://arunrathod.blogspot.com/2008/05/p2p-cycle.html

A.ORG_ID “ORG ID”,

E.VENDOR_NAME “VENDOR NAME”,

UPPER(E.VENDOR_TYPE_LOOKUP_CODE) “VENDOR TYPE”,

F.VENDOR_SITE_CODE “VENDOR SITE”,

F.ADDRESS_LINE1 “ADDRESS”,

F.CITY “CITY”,

F.COUNTRY “COUNTRY”,

TO_CHAR(TRUNC(D.CREATION_DATE)) “PO DATE”,

D.SEGMENT1 “PO NUMBER”,

D.TYPE_LOOKUP_CODE “PO TYPE”,

C.QUANTITY_ORDERED “QTY ORDERED”,

C.QUANTITY_CANCELLED “QTY CANCALLED”,

G.ITEM_DESCRIPTION “ITEM DESCRIPTION”,

G.UNIT_PRICE “UNIT PRICE”,

(NVL(C.QUANTITY_ORDERED,0)-NVL(C.QUANTITY_CANCELLED,0))*NVL(G.UNIT_PRICE,0) “PO Line Amount”,

(SELECT

DECODE(PH.APPROVED_FLAG, ‘Y’, ‘Approved’)

FROM PO.PO_HEADERS_ALL PH

WHERE PH.PO_HEADER_ID = D.PO_HEADER_ID) “PO STATUS”,

A.INVOICE_TYPE_LOOKUP_CODE “INVOICE TYPE”,

A.INVOICE_AMOUNT “INVOICE AMOUNT”,

TO_CHAR(TRUNC(A.INVOICE_DATE)) “INVOICE DATE”,

A.INVOICE_NUM “INVOICE NUMBER”,

(SELECT

DECODE(X.MATCH_STATUS_FLAG, ‘A’, ‘Approved’)

FROM AP.AP_INVOICE_DISTRIBUTIONS_ALL X

WHERE X.INVOICE_DISTRIBUTION_ID = B.INVOICE_DISTRIBUTION_ID)”Invoice Approved?”,

A.AMOUNT_PAID,

H.AMOUNT,

I.CHECK_NUMBER “CHEQUE NUMBER”,

TO_CHAR(TRUNC(I.CHECK_DATE)) “PAYMENT DATE”

FROM AP.AP_INVOICES_ALL A,

AP.AP_INVOICE_DISTRIBUTIONS_ALL B,

PO.PO_DISTRIBUTIONS_ALL C,

PO.PO_HEADERS_ALL D,

PO.PO_VENDORS E,

PO.PO_VENDOR_SITES_ALL F,

PO.PO_LINES_ALL G,

AP.AP_INVOICE_PAYMENTS_ALL H,

AP.AP_CHECKS_ALL I

WHERE A.INVOICE_ID = B.INVOICE_ID

AND B.PO_DISTRIBUTION_ID = C. PO_DISTRIBUTION_ID (+)

AND C.PO_HEADER_ID = D.PO_HEADER_ID (+)

AND E.VENDOR_ID (+) = D.VENDOR_ID

AND F.VENDOR_SITE_ID (+) = D.VENDOR_SITE_ID

AND D.PO_HEADER_ID = G.PO_HEADER_ID

AND C.PO_LINE_ID = G.PO_LINE_ID

AND A.INVOICE_ID = H.INVOICE_ID

AND H.CHECK_ID = I.CHECK_ID

AND F.VENDOR_SITE_ID = I.VENDOR_SITE_ID

AND C.PO_HEADER_ID IS NOT NULL

AND A.PAYMENT_STATUS_FLAG = ‘Y’

AND D.TYPE_LOOKUP_CODE != ‘BLANKET’;

Note: To know more about P2P cycle, please navigate to the below link:

http://arunrathod.blogspot.com/2008/05/p2p-cycle.html

Tuesday, August 12, 2008

Types of table extensions in Oracle

_ALL : Table holds all the information about different operating units. Multi-Org environment. You can also set the client_info to specific operating unit to see the data specific to that operating unit only.

_TL are tables corresponding to another table with the same name minus the _TL. These tables provide multiple language support. For each item in the table without _TL there can be many rows in the _TL table, but all with different values in the LANGUAGE column.

_B these are the BASE tables.

They are very important and the data is stored in the table with all validations.

It is supposed that these table will always contain the perfect format data.

If anything happens to the BASE table data, then it is a data corruption issue.

_F these are date tracked tables, which occur in HR and Payroll. For these there are two date columns EFFECTIVE_START_DATE and EFFECTIVE_END_DATE which together with the PK identifies a row uniquely. The date intervals cannot overlap. Many think they are Secured data. Guess someone from Oracle confirms.

_V tables are the views created on base tables

_VL are views for multi language tables which combines the row of the base table with the corresponding row of the _TL table where the LANGUAGE = USERENV(’LANG’).

_S are sequences, used for finding new values for the primary key of a table.

_A are Audit Shadow Tables

_AVN and _ACN are Audit Shadow Views (when data was changed, and with what values

_TL are tables corresponding to another table with the same name minus the _TL. These tables provide multiple language support. For each item in the table without _TL there can be many rows in the _TL table, but all with different values in the LANGUAGE column.

_B these are the BASE tables.

They are very important and the data is stored in the table with all validations.

It is supposed that these table will always contain the perfect format data.

If anything happens to the BASE table data, then it is a data corruption issue.

_F these are date tracked tables, which occur in HR and Payroll. For these there are two date columns EFFECTIVE_START_DATE and EFFECTIVE_END_DATE which together with the PK identifies a row uniquely. The date intervals cannot overlap. Many think they are Secured data. Guess someone from Oracle confirms.

_V tables are the views created on base tables

_VL are views for multi language tables which combines the row of the base table with the corresponding row of the _TL table where the LANGUAGE = USERENV(’LANG’).

_S are sequences, used for finding new values for the primary key of a table.

_A are Audit Shadow Tables

_AVN and _ACN are Audit Shadow Views (when data was changed, and with what values

Apps Documentation

Here is the one stop place for all documentation for Oracle Application.

http://www.oracle.com/technology/documentation/applications.html

http://www.oracle.com/technology/documentation/applications.html

Oracle AIM Document Templates

1. Business Process Architecture (BP)

BP.010 Define Business and Process Strategy

BP.020 Catalog and Analyze Potential Changes

BP.030 Determine Data Gathering Requirements

BP.040 Develop Current Process Model

BP.050 Review Leading Practices

BP.060 Develop High-Level Process Vision

BP.070 Develop High-Level Process Design

BP.080 Develop Future Process Model

BP.090 Document Business Procedure

2. Business Requirements Definition (RD)

RD.010 Identify Current Financial and Operating Structure

RD.020 Conduct Current Business Baseline

RD.030 Establish Process and Mapping Summary

RD.040 Gather Business Volumes and Metrics

RD.050 Gather Business Requirements

RD.060 Determine Audit and Control Requirements

RD.070 Identify Business Availability Requirements

RD.080 Identify Reporting and Information Access Requirements

3. Business Requirements Mapping

BR.010 Analyze High-Level Gaps

BR.020 Prepare mapping environment

BR.030 Map Business requirements

BR.040 Map Business Data

BR.050 Conduct Integration Fit Analysis

BR.060 Create Information Model

BR.070 Create Reporting Fit Analysis

BR.080 Test Business Solutions

BR.090 Confirm Integrated Business Solutions

BR.100 Define Applications Setup

BR.110 Define security Profiles

4. Application and Technical Architecture (TA)

TA.010 Define Architecture Requirements and Strategy

TA.020 Identify Current Technical Architecture

TA.030 Develop Preliminary Conceptual Architecture

TA.040 Define Application Architecture

TA.050 Define System Availability Strategy

TA.060 Define Reporting and Information Access Strategy

TA.070 Revise Conceptual Architecture

TA.080 Define Application Security Architecture

TA.090 Define Application and Database Server Architecture

TA.100 Define and Propose Architecture Subsystems

TA.110 Define System Capacity Plan

TA.120 Define Platform and Network Architecture

TA.130 Define Application Deployment Plan

TA.140 Assess Performance Risks

TA.150 Define System Management Procedures

5. Module Design and Build (MD)

MD.010 Define Application Extension Strategy

MD.020 Define and estimate application extensions

MD.030 Define design standards

MD.040 Define Build Standards

MD.050 Create Application extensions functional design

MD.060 Design Database extensions

MD.070 Create Application extensions technical design

MD.080 Review functional and Technical designs

MD.090 Prepare Development environment

MD.100 Create Database extensions

MD.110 Create Application extension modules

MD.120 Create Installation routines

6. Data Conversion (CV)

CV.010 Define data conversion requirements and strategy

CV.020 Define Conversion standards

CV.030 Prepare conversion environment

CV.040 Perform conversion data mapping

CV.050 Define manual conversion procedures

CV.060 Design conversion programs

CV.070 Prepare conversion test plans

CV.080 Develop conversion programs

CV.090 Perform conversion unit tests

CV.100 Perform conversion business objects

CV.110 Perform conversion validation tests

CV.120 Install conversion programs

CV.130 Convert and verify data

7. Documentation (DO)

DO.010 Define documentation requirements and strategy

DO.020 Define Documentation standards and procedures

DO.030 Prepare glossary

DO.040 Prepare documentation environment

DO.050 Produce documentation prototypes and templates

DO.060 Publish user reference manual

DO.070 Publish user guide

DO.080 Publish technical reference manual

DO.090 Publish system management guide

8. Business System Testing (TE)

TE.010 Define testing requirements and strategy

TE.020 Develop unit test script

TE.030 Develop link test script

TE.040 Develop system test script

TE.050 Develop systems integration test script

TE.060 Prepare testing environments

TE.070 Perform unit test

TE.080 Perform link test

TE.090 perform installation test

TE.100 Prepare key users for testing

TE.110 Perform system test

TE.120 Perform systems integration test

TE.130 Perform Acceptance test

9. PERFORMACE TESTING(PT)

PT.010 - Define Performance Testing Strategy

PT.020 - Identify Performance Test Scenarios

PT.030 - Identify Performance Test Transaction

PT.040 - Create Performance Test Scripts

PT.050 - Design Performance Test Transaction Programs

PT.060 - Design Performance Test Data

PT.070 - Design Test Database Load Programs

PT.080 - Create Performance Test TransactionPrograms

PT.090 - Create Test Database Load Programs

PT.100 - Construct Performance Test Database

PT.110 - Prepare Performance Test Environment

PT.120 - Execute Performance Test

10. Adoption and Learning (AP)

AP.010 - Define Executive Project Strategy

AP.020 - Conduct Initial Project Team Orientation

AP.030 - Develop Project Team Learning Plan

AP.040 - Prepare Project Team Learning Environment

AP.050 - Conduct Project Team Learning Events

AP.060 - Develop Business Unit Managers’Readiness Plan

AP.070 - Develop Project Readiness Roadmap

AP.080 - Develop and Execute CommunicationCampaign

AP.090 - Develop Managers’ Readiness Plan

AP.100 - Identify Business Process Impact onOrganization

AP.110 - Align Human Performance SupportSystems

AP.120 - Align Information Technology Groups

AP.130 - Conduct User Learning Needs Analysis

AP.140 - Develop User Learning Plan

AP.150 - Develop User Learningware

AP.160 - Prepare User Learning Environment

AP.170 - Conduct User Learning Events

AP.180 - Conduct Effectiveness Assessment

11. Production Migration (PM)

PM.010 - Define Transition Strategy

PM.020 - Design Production Support Infrastructure

PM.030 - Develop Transition and Contingency Plan

PM.040 - Prepare Production Environment

PM.050 - Set Up Applications

PM.060 - Implement Production Support Infrastructure

PM.070 - Verify Production Readiness

PM.080 - Begin Production

PM.090 - Measure System Performance

PM.100 - Maintain System

PM.110 - Refine Production System

PM.120 - Decommission Former Systems

PM.130 - Propose Future Business Direction

PM.140 - Propose Future Technical Direction

BP.010 Define Business and Process Strategy

BP.020 Catalog and Analyze Potential Changes

BP.030 Determine Data Gathering Requirements

BP.040 Develop Current Process Model

BP.050 Review Leading Practices

BP.060 Develop High-Level Process Vision

BP.070 Develop High-Level Process Design

BP.080 Develop Future Process Model

BP.090 Document Business Procedure

2. Business Requirements Definition (RD)

RD.010 Identify Current Financial and Operating Structure

RD.020 Conduct Current Business Baseline

RD.030 Establish Process and Mapping Summary

RD.040 Gather Business Volumes and Metrics

RD.050 Gather Business Requirements

RD.060 Determine Audit and Control Requirements

RD.070 Identify Business Availability Requirements

RD.080 Identify Reporting and Information Access Requirements

3. Business Requirements Mapping

BR.010 Analyze High-Level Gaps

BR.020 Prepare mapping environment

BR.030 Map Business requirements

BR.040 Map Business Data

BR.050 Conduct Integration Fit Analysis

BR.060 Create Information Model

BR.070 Create Reporting Fit Analysis

BR.080 Test Business Solutions

BR.090 Confirm Integrated Business Solutions

BR.100 Define Applications Setup

BR.110 Define security Profiles

4. Application and Technical Architecture (TA)

TA.010 Define Architecture Requirements and Strategy

TA.020 Identify Current Technical Architecture

TA.030 Develop Preliminary Conceptual Architecture

TA.040 Define Application Architecture

TA.050 Define System Availability Strategy

TA.060 Define Reporting and Information Access Strategy

TA.070 Revise Conceptual Architecture

TA.080 Define Application Security Architecture

TA.090 Define Application and Database Server Architecture

TA.100 Define and Propose Architecture Subsystems

TA.110 Define System Capacity Plan

TA.120 Define Platform and Network Architecture

TA.130 Define Application Deployment Plan

TA.140 Assess Performance Risks

TA.150 Define System Management Procedures

5. Module Design and Build (MD)

MD.010 Define Application Extension Strategy

MD.020 Define and estimate application extensions

MD.030 Define design standards

MD.040 Define Build Standards

MD.050 Create Application extensions functional design

MD.060 Design Database extensions

MD.070 Create Application extensions technical design

MD.080 Review functional and Technical designs

MD.090 Prepare Development environment

MD.100 Create Database extensions

MD.110 Create Application extension modules

MD.120 Create Installation routines

6. Data Conversion (CV)

CV.010 Define data conversion requirements and strategy

CV.020 Define Conversion standards

CV.030 Prepare conversion environment

CV.040 Perform conversion data mapping

CV.050 Define manual conversion procedures

CV.060 Design conversion programs

CV.070 Prepare conversion test plans

CV.080 Develop conversion programs

CV.090 Perform conversion unit tests

CV.100 Perform conversion business objects

CV.110 Perform conversion validation tests

CV.120 Install conversion programs

CV.130 Convert and verify data

7. Documentation (DO)

DO.010 Define documentation requirements and strategy

DO.020 Define Documentation standards and procedures

DO.030 Prepare glossary

DO.040 Prepare documentation environment

DO.050 Produce documentation prototypes and templates

DO.060 Publish user reference manual

DO.070 Publish user guide

DO.080 Publish technical reference manual

DO.090 Publish system management guide

8. Business System Testing (TE)

TE.010 Define testing requirements and strategy

TE.020 Develop unit test script

TE.030 Develop link test script

TE.040 Develop system test script

TE.050 Develop systems integration test script

TE.060 Prepare testing environments

TE.070 Perform unit test

TE.080 Perform link test

TE.090 perform installation test

TE.100 Prepare key users for testing

TE.110 Perform system test

TE.120 Perform systems integration test

TE.130 Perform Acceptance test

9. PERFORMACE TESTING(PT)

PT.010 - Define Performance Testing Strategy

PT.020 - Identify Performance Test Scenarios

PT.030 - Identify Performance Test Transaction

PT.040 - Create Performance Test Scripts

PT.050 - Design Performance Test Transaction Programs

PT.060 - Design Performance Test Data

PT.070 - Design Test Database Load Programs

PT.080 - Create Performance Test TransactionPrograms

PT.090 - Create Test Database Load Programs

PT.100 - Construct Performance Test Database

PT.110 - Prepare Performance Test Environment

PT.120 - Execute Performance Test

10. Adoption and Learning (AP)

AP.010 - Define Executive Project Strategy

AP.020 - Conduct Initial Project Team Orientation

AP.030 - Develop Project Team Learning Plan

AP.040 - Prepare Project Team Learning Environment

AP.050 - Conduct Project Team Learning Events

AP.060 - Develop Business Unit Managers’Readiness Plan

AP.070 - Develop Project Readiness Roadmap

AP.080 - Develop and Execute CommunicationCampaign

AP.090 - Develop Managers’ Readiness Plan

AP.100 - Identify Business Process Impact onOrganization

AP.110 - Align Human Performance SupportSystems

AP.120 - Align Information Technology Groups

AP.130 - Conduct User Learning Needs Analysis

AP.140 - Develop User Learning Plan

AP.150 - Develop User Learningware

AP.160 - Prepare User Learning Environment

AP.170 - Conduct User Learning Events

AP.180 - Conduct Effectiveness Assessment

11. Production Migration (PM)

PM.010 - Define Transition Strategy

PM.020 - Design Production Support Infrastructure

PM.030 - Develop Transition and Contingency Plan

PM.040 - Prepare Production Environment

PM.050 - Set Up Applications

PM.060 - Implement Production Support Infrastructure

PM.070 - Verify Production Readiness

PM.080 - Begin Production